We recently bought a new ProLiant server for running performance tests, so we can now dedicate one server to testing only. The challenge was defining and setting up an optimal test environment on it.

Our requirements for the test environment are:

- Stable: repeated runs give the same results as long as the tested application's performance has not changed

- Easy to deploy and use when needed

- Low overhead, so that measurements reflect real application performance as closely as possible
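The "stable" and "low overhead" requirements can be turned into simple numeric checks. The sketch below is a hypothetical illustration, not our actual test tooling: it assumes stability means the coefficient of variation (standard deviation divided by mean) of repeated run times stays under a chosen threshold (5% here, an arbitrary value), and that overhead is the relative slowdown of the median virtualized run compared with the median bare-metal run. The run times are illustrative numbers, not measurements from our tests.

```python
import statistics

def coefficient_of_variation(samples):
    """Relative spread of repeated measurements: sample stdev / mean."""
    return statistics.stdev(samples) / statistics.mean(samples)

def is_stable(samples, max_cv=0.05):
    """Hypothetical stability criterion: runs agree within max_cv (5% by default)."""
    return coefficient_of_variation(samples) <= max_cv

def overhead(virtualized, bare_metal):
    """Relative slowdown of the median virtualized run vs. bare metal (0.10 == 10% slower)."""
    return statistics.median(virtualized) / statistics.median(bare_metal) - 1.0

# Illustrative run times in seconds (not real measurements)
container_runs = [10.2, 10.1, 10.3, 10.2, 10.2]
host_runs = [10.0, 9.9, 10.1, 10.0, 10.0]

print(f"CV: {coefficient_of_variation(container_runs):.2%}")
print("stable:", is_stable(container_runs))
print(f"overhead: {overhead(container_runs, host_runs):.1%}")
```

Using the median for the overhead comparison keeps a single outlier run from skewing the result, while the coefficient of variation makes the stability threshold independent of how long an individual test takes.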

Defining the Test Environment

Our test server does not have a state-of-the-art processor, but it is good enough and it has plenty of memory. We try to keep everything needed for testing on the same server, so we looked at a few different virtualization techniques.

VMware vSphere

We use vSphere for everything and everyone is familiar with it. There is no life without it.

Pros

- Quick to install

- We have a good knowledge of vSphere

- Easy to customize

Cons

- Pro version is expensive for infrequent use

- Free version limits performance

Docker

Docker is open source software that wraps an application in a complete filesystem containing everything it needs to run: code, runtime, system tools and system libraries. Small is beautiful.

Pros

- Doesn’t limit performance

- One file defines the complete system

- Small learning curve

Cons

- Does not fully isolate the container environment from the host system (containers share the host kernel)

KVM

KVM is a Linux-based open source hypervisor, and it is the most widely deployed open source hypervisor. Heavy-duty, mature virtualization.

Pros

- Doesn’t limit performance

- Isolates the guest system from the host system

Cons

- Frustrating installation process

- Tricky to customize

Selected Environment

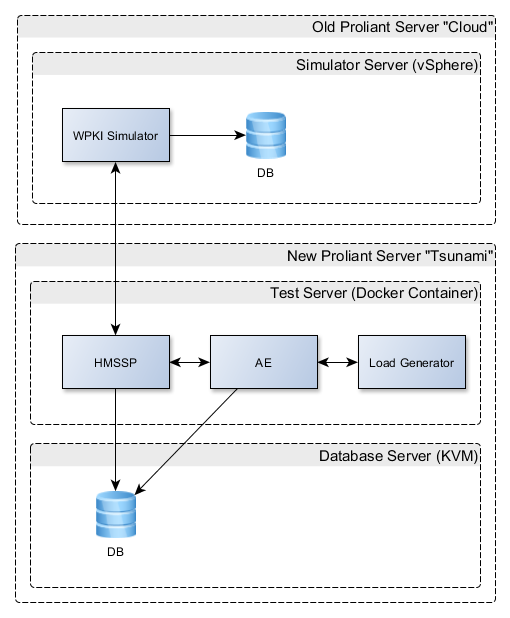

We started by creating a KVM for the databases. It proved fairly difficult to install, so we decided not to use it for the MSSPs.

Instead we set up a docker container for the MSSP system and an existing VMWare instance for the simulator. The final system looks like this:

Summary

In the end, we used all three virtualization techniques. This was definitely not part of the original plan, but it works well for us. All in all, setting up the environment and running the performance tests took us about five days.